Below are the IMPORTANT takeaways I have currently from my research, for PostgreSQL Indexes –

1. When deploying New/Modified Indexes to Production Environment, use the CONCURRENTLY option with the CREATE Index command, this will allow “Writes” on the Database, to proceed seamlessly. There are conditions under which this command can be used and needs to be monitored closely during deployment as well, as failure in deployment may result in an invalid index which would need to be removed manually.

2. Indexes with multiple columns in their definition must be used as sparingly as possible. The PostgreSQL Planner uses the Leading column as the Index for the major “Filtering Criteria”, hence the presence of other columns in the definition, though will be used for inequality comparison or for fetching data, is mostly more of a maintenance overhead than a performance benefit.

3. We can design Indexes with a “WHERE CLAUSE” in the Index Definition, called as partial indexes. Saves on both space and time but needs to be used very carefully, only when we are absolutely sure that the condition will either be directly or arithmetically connected to the query, else the index can become a maintenance overhead with no performance benefit.

4. If your workload includes a mix of queries that sometimes involve only column x, sometimes only column y, and sometimes both x and y, you might choose to create two separate indexes on x and y, relying on index combinations to process the queries that use both columns. This would be a better approach than creating a multi-column index with x,y in above mentioned scenario.

5. We can create indexes on expressions of columns, such as (lower (col1)) ;(( first_name || ‘ ‘ || last_name)), etc. Index expressions are relatively expensive to maintain, because the derived expression(s) must be computed for each row upon insertion and whenever it is updated. Indexes on expressions are useful when retrieval speed is more important than insertion and update speed.

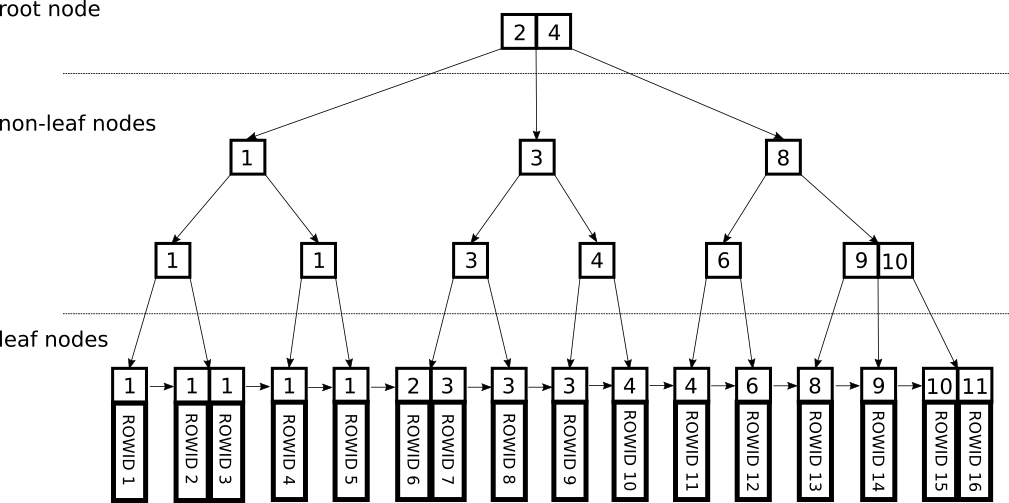

6. PostgreSQL supports index-only scans, which can answer queries from an index alone without any need for random heap access, given that it is primarily a B-tree index and the query must reference only columns stored in the index. It will be a win only if a significant fraction of the table’s heap pages have their all-visible map bits set. But tables in which a large fraction of the rows are unchanging are common enough to make this type of scan very useful in practice.

7. To make effective use of the index-only scan feature, you might choose to create a covering index, which is an index specifically designed to include the columns needed by a particular type of query that you run frequently. Since queries typically need to retrieve more columns than just the ones they search on, PostgreSQL allows you to create an index in which some columns are just “payload” and are not part of the search key. This is done by adding an INCLUDE clause listing the extra columns.

8. It’s wise to be conservative about adding non-key payload columns to an index, especially wide columns. If an index tuple exceeds the maximum size allowed for the index type, data insertion will fail. In any case, non-key columns duplicate data from the index’s table and bloat the size of the index, thus potentially slowing searches. And remember that there is little point in including payload columns in an index unless the table changes slowly enough that an index-only scan is likely to not need to access the heap. If the heap tuple must be visited anyway, it costs nothing more to get the column’s value from there.

9. Suffix truncation removes non-key columns from upper B-Tree levels. As payload columns, they are never used to guide index scans. The truncation process also removes one or more trailing key column(s) when the remaining prefix of key column(s) happens to be sufficient to describe tuples on the lowest B-Tree level. In practice, covering indexes without an INCLUDE clause often avoid storing columns that are effectively payload in the upper levels. However, explicitly defining payload columns as non-key columns reliably keeps the tuples in upper levels small.

10. In principle, index-only scans can be used with expression indexes. However, PostgreSQL’s planner is currently not very smart about such cases. It considers a query to be potentially executable by index-only scan only when all columns needed by the query are available from the index. For example, for a query searching on f(x), x is not needed except in the context f(x), but the planner does not notice that and concludes that an index-only scan is not possible. If an index-only scan seems sufficiently worthwhile, this can be worked around by adding x as an included column. Partial indexes also have support index-only scans.

11. An index can support only one collation per index column. If multiple collations are of interest, multiple indexes may be needed. The index automatically uses the collation of the underlying column.

12. Always run ANALYZE first before examining index usage. This command collects statistics about the distribution of the values in the table. This information is required to estimate the number of rows returned by a query, which is needed by the planner to assign realistic costs to each possible query plan. In absence of any real statistics, some default values are assumed, which are almost certain to be inaccurate.

13. It is fatal to use very small test data sets to check index usage. While selecting 1000 out of 100000 rows could be a candidate for an index, selecting 1 out of 100 rows will hardly be, because the 100 rows probably fit within a single disk page, and there is no plan that can beat sequentially fetching 1 disk page.

That completes my look at Indexes in PostgreSQL in this series. Next Series on PostgreSQL Performance.